Automated Driving and Lolaark Vision Technology

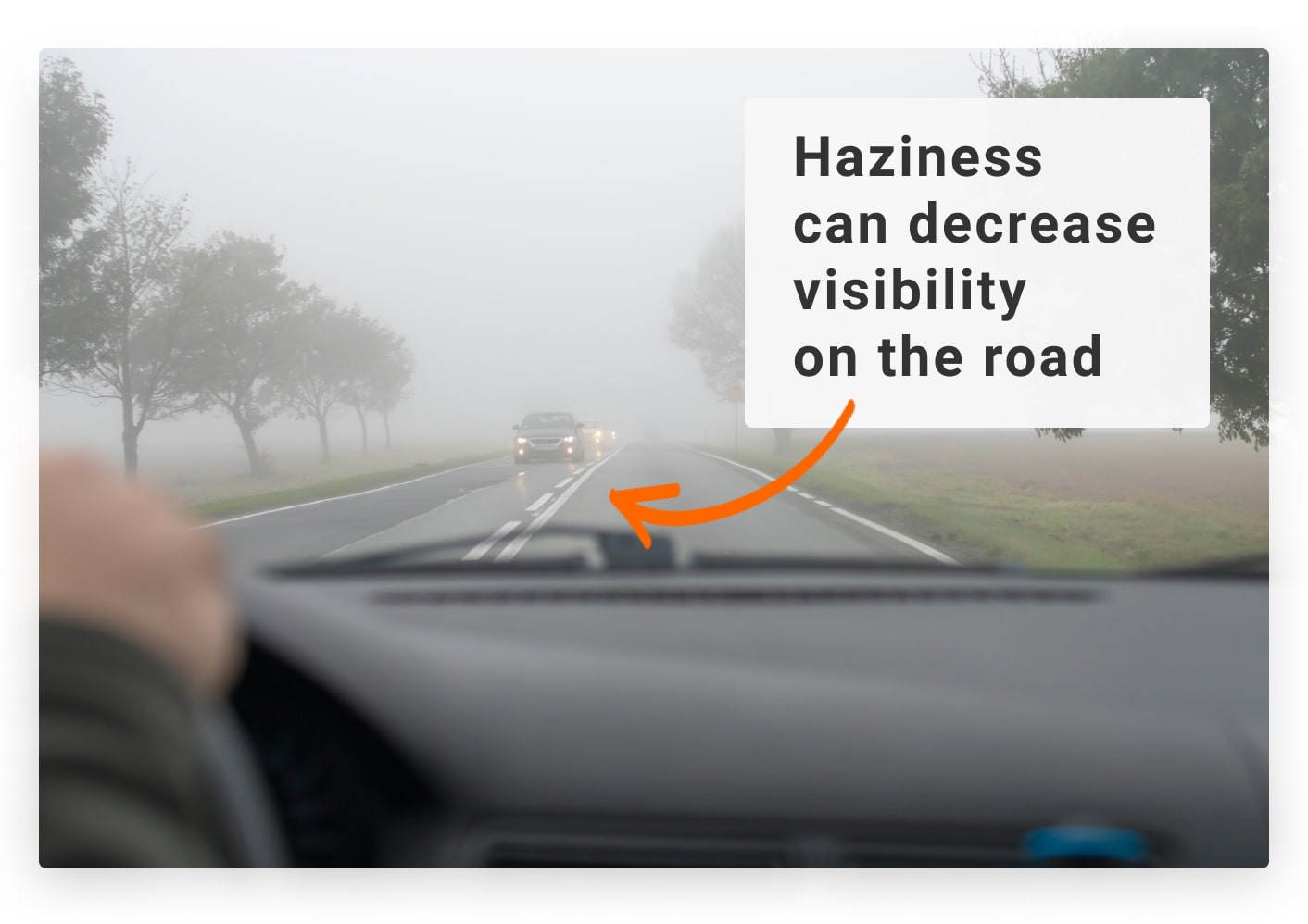

This is a continuation of our July 16th post. Driving automation software requires several complex components to work fast and with a certain degree of accuracy. Key components of such a system are the car's proximity sensors, known as radars, and the GPS. At any given time, the system has to evaluate street conditions, obstacles, red lights, pedestrians, and other traffic. Radars help to detect nearby obstacles and, for example, apply the brakes to avoid a collision, but cameras play a major role in developing the system's AI situational awareness. Cameras can see a lot better than we can. If you want proof of this, simply take a picture of distant objects with your cell phone and compare the picture with your own view. You will immediately realize that certain details of distant objects that are hard to see with the naked eye are easily visible in the picture. However, cameras do not always show or capture what is needed at any given time.

Cameras give great views when visibility and illumination are good, but not when there is rain, fog, or smoke. Let’s revisit the photos we included in the July 16th post. The pedestrian, who is actually one of our investors, walks on the street literally by the curb. The camera does capture him, but his image is hidden by the darkness of the scene. Here we want to clarify one critical fact: LV brings visual details up to the “surface.” As you can see in the photos we show, the LV-processed photo makes the pedestrian visible enough for the object detector to discover him about 1 second before the same detector discovers him in the raw footage. In the top-right and bottom-left picture pairs, you see cases where we have a detection in the raw frame but not in the LV-processed one, and vice versa. Five seconds after the initial detection in the LV-processed stream, we have the first dual detection, but the vehicle is now only about 20 feet from the pedestrian, which is not enough to avoid him safely.

The fact that LV can trigger a detection 1 second before the first detection of the pedestrian in the raw camera video stream, and about 110 feet from the pedestrian, indicates how LV can make a difference for camera-based driver-assistance software. It also demonstrates how such systems are prone to fail and that drivers should stay vigilant when they drive and not rely on automation. Cameras and detectors can help, but we can’t fully rely on them. In a future post, we will discuss the rules by which the system integrates the visual information retrieved from the raw video stream and the LV-processed stream, and ways to harness the synergistic effect of the detections resulting from the two streams.
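
To put the distances quoted above in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the figures from this post (about 110 feet at the first LV detection, about 20 feet five seconds later, and a 1-second head start over the raw stream). The constant-speed assumption and the function names are ours, added for illustration only; this is not a measurement of the actual vehicle speed.

```python
# Rough arithmetic from the figures quoted in this post.
# ASSUMPTION: the vehicle moves at a constant speed between the two detections.

FT_PER_S_TO_MPH = 3600 / 5280  # converts feet per second to miles per hour


def average_speed_ft_s(start_ft: float, end_ft: float, elapsed_s: float) -> float:
    """Average closing speed implied by two distances and the time between them."""
    return (start_ft - end_ft) / elapsed_s


def distance_gained_ft(speed_ft_s: float, lead_time_s: float) -> float:
    """Extra distance available when a detection arrives lead_time_s earlier."""
    return speed_ft_s * lead_time_s


# About 110 ft at the first LV detection, about 20 ft at the first dual detection, 5 s apart.
speed = average_speed_ft_s(start_ft=110.0, end_ft=20.0, elapsed_s=5.0)
speed_mph = speed * FT_PER_S_TO_MPH

# LV detects the pedestrian about 1 second before the raw stream does.
extra_ft = distance_gained_ft(speed, lead_time_s=1.0)

print(f"Implied average speed: {speed:.1f} ft/s (about {speed_mph:.1f} mph)")
print(f"Distance gained by a 1 s earlier detection: {extra_ft:.1f} ft")
```

Under these assumptions the vehicle covers roughly 18 feet every second, so each second of earlier detection buys about that much extra room to react, and the gain grows proportionally at higher speeds.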